Evolving Solutions Observations on the Announced IBM z17 Mainframe

On Tuesday, April 8, 2025, IBM announced the replacement for its successful enterprise-class mainframe, the z16. The new mainframe, known as the IBM z17™ (surprise!), is yet another example of excellent IBM mainframe design and engineering. That comes as no surprise; after all, it’s IBM.

There is quite a bit to unpack as part of this announcement, so let’s get started.

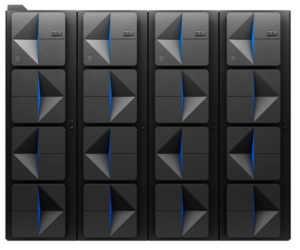

First, a picture is still worth a thousand words: here is IBM’s z17 mainframe.

If you are familiar with IBM’s Z System family, you might be inclined to say, “It looks just like its z16 predecessor.” On behalf of Evolving Solutions, I would respond: looking at the covers, yeah, you’re right. But that is where the similarity ends.

What’s inside the covers?

When you compare the IBM z17 against the prior-generation z16, you quickly realize that it offers more processor cores, significantly more memory, and greatly enhanced AI capabilities.

The machine type is 9175, with four features known as Max43, Max90, Max136, and Max183. There is also a new-build z17 available as feature Max208. As you may be aware, the numeric value in the feature name is the number of cores available to the client. In addition, with proper planning, upgrading from one feature to another is possible non-disruptively at the client location, e.g. from a Max43 to a Max90.

Comparing the above side by side with its predecessor, the IBM z16 offered features Max39, Max82, Max125, Max168, and Max200. Like the Max208, the Max200 is a factory-build option only.

Clearly, the z17 offers additional cores, and this translates into improved scale for our clients. Single-thread performance is up 11% versus the z16, and the added cores offer 15-20% additional capacity growth, depending on workload. There is now 60% more memory available on this new server: 64 terabytes, up from 40 terabytes on the z16. The additional memory will be put to good use for traditional workloads, and even more so by clients that are investing in multi-model AI strategies. A multi-model AI strategy refers to deploying predictive AI workloads (e.g. inferencing) as well as standing up Large Language Models that will make use of the new IBM Spyre™ AI Accelerator cards.
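The generation-over-generation figures quoted above can be checked with a little arithmetic. The sketch below is illustrative only: the names `Z16_FEATURES`, `Z17_FEATURES`, `pct_increase`, and `core_growth` are my own, the numbers are the ones quoted in this article, and pairing the features by position (smallest to largest) is an assumption made for the comparison.

```python
# Core counts per feature, as quoted in this article (z16 vs. z17).
Z16_FEATURES = {"Max39": 39, "Max82": 82, "Max125": 125, "Max168": 168, "Max200": 200}
Z17_FEATURES = {"Max43": 43, "Max90": 90, "Max136": 136, "Max183": 183, "Max208": 208}

# Maximum memory in terabytes, as quoted in this article.
Z16_MAX_MEMORY_TB = 40
Z17_MAX_MEMORY_TB = 64


def pct_increase(old, new):
    """Return the percentage increase going from old to new."""
    return (new - old) * 100.0 / old


def core_growth():
    """Pair features by position (an assumption) and compute core growth."""
    pairs = zip(Z16_FEATURES.items(), Z17_FEATURES.items())
    return [
        (old_name, new_name, pct_increase(old_cores, new_cores))
        for (old_name, old_cores), (new_name, new_cores) in pairs
    ]


if __name__ == "__main__":
    for old_name, new_name, growth in core_growth():
        print(f"{old_name} -> {new_name}: {growth:.1f}% more cores")
    memory_growth = pct_increase(Z16_MAX_MEMORY_TB, Z17_MAX_MEMORY_TB)
    print(f"Memory: {memory_growth:.0f}% more")
```

Note that this only compares raw core counts and memory. It says nothing about delivered capacity, which, as noted above, depends on workload.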

Lastly, all of this increased capacity, performance, and processing power is possible while spending less on your electric bill! The estimate is that z17 mainframe energy consumption will drop by 17%.

How is that even possible? Next section please.

Continued Platform Innovation

DPU – I/O Engine

How is it possible to do more and yet consume less energy? Some might ask: okay, which RAS¹ characteristics were compromised? I can say with confidence that nothing was compromised; the system was simply refined under the new design architecture.

What IBM did to improve the power consumption posture of this platform was to take a long-standing hardware component known as the System Assist Processor (SAP) and move it from its own set of dedicated cores onto the Telum II processor chip for better integration. IBM Z has a long history of offloading I/O processing from the general-purpose processors onto the SAP. There is now a new feature known as the Data Processing Unit (DPU), located on the Telum II chip, that performs I/O processing and acceleration for both storage and network protocols.

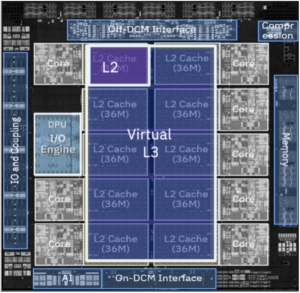

Shown here is the IBM z17 PU (processing unit) chip design. This chip, designed by IBM engineers, is fabricated in 5 nm FinFET technology by Samsung Foundries. You can see the DPU I/O Engine on the left side of the chip, about halfway up.

IBM Spyre AI Accelerator

The IBM z16 mainframe was announced back in 2022 and was the first mainframe server to offer AI capabilities integrated onto the processor chip known as Telum. What made that first Telum chip unique was the introduction of a then-new AI accelerator designed by IBM Research and IBM Infrastructure. This Telum chip allowed a mainframe client to perform inferencing in real time against all of their online transactions with a promise from IBM that the added latency would be a mere 1 millisecond per transaction.

Following IBM z16 general availability, IBM continued its research in AI accelerators, developing a new artificial intelligence unit (AIU) prototype chip that expanded the single accelerator in the z16 Telum to 32 accelerator cores in the AIU prototype.

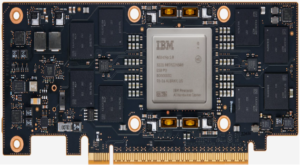

This IBM prototype chip is now incorporated into the z17 mainframe as an enterprise-grade product known as the IBM Spyre AI accelerator. The Spyre accelerator shares a similar architecture with that first prototype, hosting 32 individual accelerator cores produced using 5 nm process technology. Each Spyre is mounted on a PCIe card, and cards can be clustered together. As one example, a cluster of 8 cards adds 256 accelerator cores to a single IBM z17 system.

The AI accelerator assembly (IBM Spyre) is a PCIe adapter that is plugged into an I/O drawer. Up to 48 features will be offered, plugged in redundant pairs for availability. The Spyre AI accelerator allows a z17 client to bring generative AI capabilities to their mission-critical workloads. The Spyre AI accelerator is the first system-on-a-chip that will allow future IBM Z systems to perform AI inferencing at an even greater scale than available today.

The Spyre AI accelerator may sound similar to an NVIDIA GPU. Those familiar with that technology will know that NVIDIA GPUs are loosely measured using a metric known as TOPS (Trillions of Operations Per Second). From a planning and positioning standpoint, the IBM Spyre accelerator offers up to roughly 300 TOPS. TOPS is a good starting point for discussing AI accelerator performance but, as you can see from the IBM z17 announcement, reduced energy cost is also a key requirement. In designing Spyre, IBM focused on optimizing power and balancing performance for enterprise AI use cases.

IBM has designed the Spyre architecture to process AI tasks more efficiently than industry-standard CPUs. For example, most AI calculations involve matrix and vector multiplication. Spyre has been designed to send data directly from one compute engine to the next, leading to energy savings. Spyre also uses a range of lower-precision numeric formats, which makes client AI models running on this technology more energy efficient and less memory intensive.

IBM has announced well over 250 potential use cases. Evolving Solutions recommends that clients plan ahead for the future implementation of Spyre adapters, which are planned for general availability in the 4th quarter of 2025². Clients must estimate the number of sets of IBM Spyre adapters they will require. A set will consist of 8 Spyre cards in an I/O drawer that will form a logical cluster.
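For a first-pass sizing estimate, the numbers quoted in this article (32 accelerator cores per card, 8 cards per set, up to 48 features) can be combined in a small helper. This is a minimal sketch under stated assumptions: the function and constant names are hypothetical, and I am assuming that each of the 48 features corresponds to one Spyre card. It is not an IBM configuration tool.

```python
CORES_PER_SPYRE_CARD = 32  # accelerator cores per card, as quoted in this article
CARDS_PER_SET = 8          # one set (logical cluster), as quoted in this article
MAX_SPYRE_CARDS = 48       # maximum features; assumed to mean one card per feature


def accelerator_cores(cards):
    """Return the total accelerator cores for a given number of Spyre cards."""
    if not 0 <= cards <= MAX_SPYRE_CARDS:
        raise ValueError(f"cards must be between 0 and {MAX_SPYRE_CARDS}")
    return cards * CORES_PER_SPYRE_CARD


def cards_for_sets(sets):
    """Return the number of cards needed for a given number of 8-card sets."""
    cards = sets * CARDS_PER_SET
    if not 0 <= cards <= MAX_SPYRE_CARDS:
        raise ValueError(f"{sets} sets would need {cards} cards, which is out of range")
    return cards


if __name__ == "__main__":
    for sets in range(1, MAX_SPYRE_CARDS // CARDS_PER_SET + 1):
        cards = cards_for_sets(sets)
        print(f"{sets} set(s): {cards} cards, {accelerator_cores(cards)} accelerator cores")
```

Under these assumptions, one set yields the 256 cores mentioned earlier, and 48 cards would yield 1,536. Actual planning should follow IBM's configuration guidance once the adapters are generally available.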

The new Spyre AI Accelerator will lead to exciting potential new use cases for IBM Z. A system equipped with a Spyre cluster could leverage far more complex AI models to identify intricate fraud patterns that a less sophisticated model might have missed.

IBM z17 sprinkles in a little RAS

In my 4½ decades supporting IBM mainframe server technology, I have found that with the announcement of any new server, IBM will sprinkle in a little Reliability, Availability, and Serviceability (RAS).

Why is this important? Because RAS promotes continued, incremental improvement in availability.

Case in point: during the keynote, Tina Tarquinio asked the Bank of Montreal attendee what allows BMO to rest easy at night. The representative stated that the platform’s base of eight nines (99.999999%) of reliability allows mainframers to rest easy.

By the way, eight nines of availability, or 99.999999% uptime, represents an extremely high level of system reliability, allowing for only a tiny fraction of downtime per year, roughly 315 milliseconds.
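The 315-millisecond figure follows directly from the definition of "nines". Here is a minimal sketch of the arithmetic; the function name is my own, and I am assuming a 365-day year.

```python
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # 31,536,000 seconds, ignoring leap years


def downtime_seconds_per_year(nines):
    """Return the downtime per year allowed by an availability of `nines` nines.

    Eight nines (99.999999%) means an unavailability of 1 part in 10**8.
    """
    return SECONDS_PER_YEAR / 10 ** nines


if __name__ == "__main__":
    for nines in (3, 5, 8):
        seconds = downtime_seconds_per_year(nines)
        print(f"{nines} nines: {seconds * 1000:,.2f} ms of downtime per year")
```

For eight nines this works out to 0.31536 seconds, or about 315 milliseconds per year.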

How is this achieved? One way is through component integration. A practical example of this is the latest FICON card used to feed the beast (i.e. read and write to data stores). IBM’s z16 FICON card offers 2 ports per card. Each port represents 1 physical path, and the operating system supports up to 8 paths to disk for read and write operations. Simple math says we need 4 cards for 8 paths. The z17 FICON card now serves up 4 paths per card, so only 2 cards are needed. Fewer cards means fewer components and greater component integration, which in turn drives higher levels of availability.
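The "simple math" above is a ceiling division. A short sketch follows, with a hypothetical function name, using only the port counts quoted in this article. It deliberately ignores real-world placement and redundancy rules.

```python
import math


def cards_needed(paths_required, ports_per_card):
    """Return the minimum number of FICON cards that provide the required paths."""
    if paths_required < 0 or ports_per_card <= 0:
        raise ValueError("paths_required must be >= 0 and ports_per_card must be > 0")
    return math.ceil(paths_required / ports_per_card)


if __name__ == "__main__":
    PATHS = 8  # paths to disk supported by the operating system, per this article
    print("z16 (2 ports per card):", cards_needed(PATHS, 2), "cards")
    print("z17 (4 ports per card):", cards_needed(PATHS, 4), "cards")
```

In practice you would likely spread paths across more cards than this minimum for availability, so treat the result as a lower bound.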

In closing

IBM’s z17 ME1 mainframe design leveraged well over 39 personas as part of the design process. The new z17 allows you to process more transactions and score more models (up to 450 billion inferences per day), each with a 1 ms response time, while using less power.

If you are interested in learning more about the IBM z17 mainframe, or in exploring how to breathe new life into your existing mainframe platform, let’s talk.
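To put the daily inference figure in perspective, it can be converted to a sustained per-second average. The sketch below is simple unit conversion with a hypothetical function name. It assumes the load is spread evenly over 24 hours, which real workloads rarely are.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 seconds


def average_per_second(per_day):
    """Convert a daily count into an average per-second rate."""
    return per_day / SECONDS_PER_DAY


if __name__ == "__main__":
    INFERENCES_PER_DAY = 450_000_000_000  # "up to 450 billion", per this article
    rate = average_per_second(INFERENCES_PER_DAY)
    print(f"About {rate:,.0f} inferences per second on average")
```

That is a little over 5.2 million inferences per second on average.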

1 RAS stands for Reliability, Availability, and Serviceability. It is the ability of a system to provide resilience starting with the underlying hardware all the way to the deployed application.

2 Statement of general direction: IBM Spyre AI Accelerator is planned to be available starting in 4Q 2025, in accordance with applicable import/export guidelines.